The Agentic Spectrum: Why It’s Not Agents vs Workflows

Teams building agentic systems for real-world applications face a common challenge: how to move from demos to production without rewriting everything later. Well-understood distributed systems problems are reappearing under new labels such as graphs (workflows), memory (state), and tool usage (function calling), while design discussions remain framed around a false choice: agents or workflows. New frameworks emphasize autonomy and look promising in prototypes, but they often fall short on core production needs such as reliability, observability, and security.

In practice, production systems need both structure and adaptability. You need workflows to ensure consistency and traceability, and agents to handle ambiguity and dynamic decision making. Looking ahead, expect additional requirements like model-agnostic design, hybrid cloud deployment, observability, and durability to come to the forefront. Planning for these now through a shared runtime—one that spans both agents and workflows, and integrates with existing application workloads—will determine how maintainable and future-proof your system is.

How Much Autonomy?

Remember the microservices debate around service granularity? Teams spent months deciding how small a service should be, only to realize later that granularity wasn't the core issue. What mattered more was clear team ownership, data boundaries, and well-defined API contracts that encapsulate implementation details.

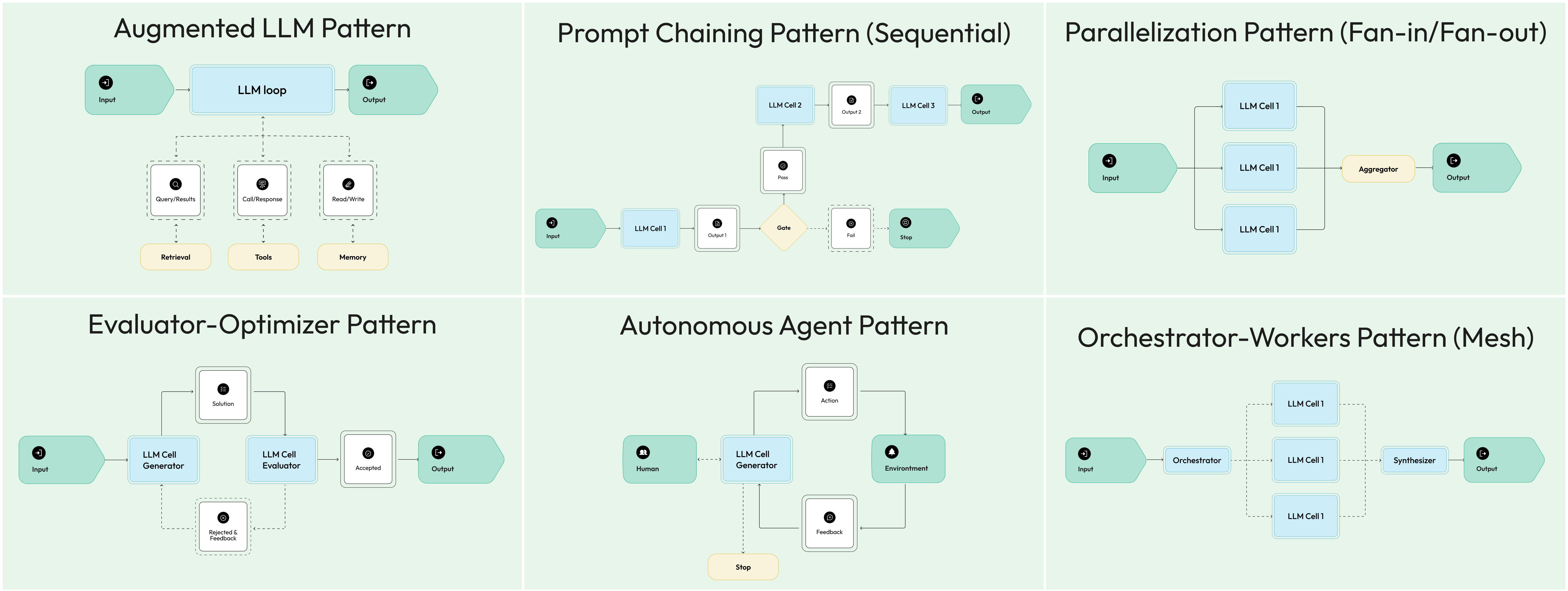

Andrew Ng, one of the most influential voices in AI, recently reframed the conversation by shifting from binary thinking to spectrum thinking. Instead of asking "Is this an agent?" he suggests asking "How agentic is this system?" This change matters. The noun "agent" pushes teams into rigid classification, while the adjective "agentic" opens up a range of possibilities. Most real-world systems fall somewhere between a single prompt and a fully autonomous, tool-using agent. Anthropic extended this definition with a useful architectural distinction. Workflows are systems where LLMs and tools follow fixed, predefined paths. Agents are systems where LLMs dynamically decide what steps to take. This defines a spectrum: workflows offer structure and predictability; agents offer autonomy and adaptability.

A customer support system makes this tangible. You might use a workflow to route incoming tickets based on predefined rules. Once routed, an agent can take over, selecting tools, accessing knowledge bases, and generating personalized responses. The workflow enforces consistency. The agent delivers adaptability.
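Here is a minimal, framework-free sketch of that split. It assumes a hypothetical keyword rule table for the workflow side, and plain callables stand in for the LLM-driven agents. The names `route_ticket`, `ROUTING_RULES`, and `handle_ticket` are illustrative and are not part of any Dapr API.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Ticket:
    subject: str
    body: str


# Workflow side: fixed, predefined routing rules (hypothetical examples).
# The same input always yields the same queue, which keeps routing auditable.
ROUTING_RULES = [
    ("refund", "billing"),
    ("invoice", "billing"),
    ("password", "account"),
    ("login", "account"),
]


def route_ticket(ticket: Ticket) -> str:
    """Deterministically map a ticket to a queue, falling back to 'general'."""
    text = f"{ticket.subject} {ticket.body}".lower()
    for keyword, queue in ROUTING_RULES:
        if keyword in text:
            return queue
    return "general"


# Agent side: each queue is served by a handler. In a real system this would be
# an LLM-backed agent that picks tools and drafts a reply; here it is a stub.
Handler = Callable[[Ticket], str]


def handle_ticket(ticket: Ticket, agents: Dict[str, Handler]) -> str:
    queue = route_ticket(ticket)  # structured, predictable step
    return agents[queue](ticket)  # adaptive step delegated to an agent


agents = {
    "billing": lambda t: f"[billing agent] drafting reply to: {t.subject}",
    "account": lambda t: f"[account agent] drafting reply to: {t.subject}",
    "general": lambda t: f"[general agent] drafting reply to: {t.subject}",
}

print(handle_ticket(Ticket("Refund request", "I was charged twice"), agents))
# prints "[billing agent] drafting reply to: Refund request"
```

The routing function can be unit-tested and audited like any business rule. Only the handlers carry the nondeterminism of LLM reasoning.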

This reflects a foundational tradeoff in system design. Sometimes you need predictability for compliance, operational reliability, or user trust. Other times, autonomous decision-making is essential for handling ambiguity or dynamic inputs. More often, you need both: intelligent agents coordinated through a structured workflow. The goal is not to choose between workflows and agents, but to integrate them through a shared programming and operational model. This autonomy-versus-structure tradeoff is only part of the equation, though. There is a third dimension to consider: non-functional requirements (NFRs) such as durability and reliability. These operational concerns apply equally across the spectrum: systems must run with consistent reliability, shared state management, and unified observability.

Workflows as the Backbone of Agentic Systems

When you're prototyping with LLMs, chaining a few API calls in a script feels sufficient. But once you run real business processes that need error recovery, execution tracking, and resumption after failures, it becomes clear that calling the LLM API is not enough. This is where durable workflows become essential.

Traditional workflow engines excel at orchestrating distributed systems, handling failures gracefully, and maintaining state across long-running tasks. These same patterns apply directly to LLM interactions. Instead of coordinating microservices, you're orchestrating AI tasks: some are as simple as a single prompt, while others are agents with tools and memory. Dapr Workflows provides exactly this foundation. Built on proven distributed systems patterns, it brings enterprise-grade reliability to AI orchestration. Each step in your AI workflow becomes a durable activity that can be retried, recovered, and audited. Here, durable means that the workflow's execution state is persisted, so it can be recovered after failures and resumed across retries.
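Dapr Workflow builds on the Durable Task model. Engines of this kind recover by replaying a workflow's recorded history instead of re-running steps that already completed. The toy below illustrates that idea in plain Python. `ToyDurableContext` and the `history` list are hypothetical stand-ins for the engine and its state store. They are not Dapr APIs.

```python
from typing import Any, Callable, List


class ToyDurableContext:
    """Toy replay context: completed activity results are appended to `history`
    (a stand-in for a persistent state store). On a re-run, recorded results are
    returned instead of executing the activity again."""

    def __init__(self, history: List[Any]) -> None:
        self.history = history
        self.position = 0
        self.executed: List[str] = []  # activities that actually ran this attempt

    def call_activity(self, fn: Callable[[Any], Any], input: Any) -> Any:
        if self.position < len(self.history):
            result = self.history[self.position]  # replay: reuse recorded output
        else:
            result = fn(input)  # first run: execute the activity...
            self.history.append(result)  # ...and persist its result
            self.executed.append(fn.__name__)
        self.position += 1
        return result


failures_left = {"finalize": 1}  # simulate one transient failure


def generate(request: str) -> str:
    return f"draft for {request}"


def finalize(draft: str) -> str:
    if failures_left["finalize"] > 0:
        failures_left["finalize"] -= 1
        raise RuntimeError("transient LLM error")
    return draft.upper()


def content_pipeline(ctx: ToyDurableContext, request: str) -> str:
    draft = ctx.call_activity(generate, request)
    return ctx.call_activity(finalize, draft)


history: List[Any] = []
for attempt in (1, 2):
    ctx = ToyDurableContext(history)
    try:
        print(attempt, content_pipeline(ctx, "spring sale"), ctx.executed)
    except RuntimeError as err:
        print(attempt, "failed:", err, ctx.executed)
# 1 failed: transient LLM error ['generate']
# 2 DRAFT FOR SPRING SALE ['finalize']
```

On the second attempt, `generate` is not called again; its recorded output is replayed. This is also why workflow code must be deterministic. On replay, it has to issue the same activity calls in the same order.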

Here’s a basic workflow example combining three core patterns:

```python
from datetime import timedelta

from dapr.ext.workflow import DaprWorkflowContext, when_any
from dapr_agents.workflow import task, workflow


@workflow(name="content_approval_workflow")
def content_approval(ctx: DaprWorkflowContext, content_request: str):
    # First task: generate the initial draft with an LLM
    draft_content = yield ctx.call_activity(
        generate_content, input={"content_request": content_request}
    )

    # Human-in-the-loop: create both tasks first (no yield yet), then race them,
    # so the 24h deadline runs while the workflow waits for approval
    approval_event = ctx.wait_for_external_event("content_approved")
    # Durable timer: survives process restarts
    timeout_event = ctx.create_timer(timedelta(hours=24))
    winner = yield when_any([approval_event, timeout_event])

    if winner == timeout_event:
        return "Content approval expired"

    # Second task: finalize and format the approved draft
    final_content = yield ctx.call_activity(
        finalize_content, input={"draft_content": draft_content}
    )
    return final_content


@task(description="Generate a marketing draft for: {content_request}")
def generate_content(content_request: str) -> str:
    # Body left empty: the LLM-backed task generates output from the description
    pass


@task(description="Format and finalize the content: {draft_content}")
def finalize_content(draft_content: str) -> str:
    pass
```

This example illustrates three essential patterns for workflows that call LLMs:

- Task chaining decomposes complex work into sequential steps, where each LLM call processes the output of the previous one. If the finalization step fails, the workflow resumes from the failure point with the same draft content rather than restarting the entire process.

- Human-in-the-loop introduces approval gates using external event handling. The workflow can pause indefinitely while waiting for human input, survive system restarts, and continue exactly where it left off once approval arrives.

- Timeout and scheduling enable long-running tasks with durable timers. You can schedule workflows to run at specific times, enforce expiration deadlines, or implement SLA-based processing, all while maintaining state consistently across system boundaries.
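The approval gate races two tasks, an external event and a timer, and continues with whichever finishes first. The following in-memory analogue uses `asyncio.wait(..., return_when=FIRST_COMPLETED)`, with an `asyncio.Event` standing in for the external event. Unlike a durable timer, nothing here survives a process restart. The sketch only illustrates the race semantics and shows why both tasks must be created before waiting.

```python
import asyncio


async def await_approval(approval: asyncio.Event, timeout_s: float) -> str:
    # Create both tasks up front, then wait for whichever completes first.
    # Awaiting them one after another would block on the approval before
    # the timer even starts.
    approval_task = asyncio.create_task(approval.wait())
    timer_task = asyncio.create_task(asyncio.sleep(timeout_s))
    done, pending = await asyncio.wait(
        {approval_task, timer_task}, return_when=asyncio.FIRST_COMPLETED
    )
    for task in pending:
        task.cancel()  # the losing branch is no longer needed
    return "approved" if approval_task in done else "Content approval expired"


async def demo() -> None:
    approval = asyncio.Event()
    # A reviewer approves shortly after the request is made.
    asyncio.get_running_loop().call_later(0.01, approval.set)
    print(await await_approval(approval, timeout_s=1.0))
    # Nobody approves within the deadline.
    print(await await_approval(asyncio.Event(), timeout_s=0.01))


asyncio.run(demo())
# approved
# Content approval expired
```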

Dapr Agents extends Anthropic’s agentic patterns, such as prompt chaining, routing, parallelization, and orchestrator-workers. It adds durability, supports external interactions, manages failure recovery through compensation, and maintains reliable execution across tasks.
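Compensation follows the saga pattern. Each step that succeeds is paired with an undo action, and if a later step fails, the undo actions for the completed steps run in reverse order. Below is a minimal sketch in plain Python. The step names (`reserve_budget`, `generate_draft`, `publish_post`) are hypothetical. In a durable engine, each step and each compensation would itself be a retried, recorded activity.

```python
from typing import Callable, List, Tuple

Step = Tuple[str, Callable[[], None], Callable[[], None]]  # (name, action, compensation)


def run_saga(steps: List[Step], log: List[str]) -> bool:
    """Run steps in order; on failure, undo completed steps in reverse order."""
    completed: List[Step] = []
    for name, action, compensate in steps:
        try:
            action()
            log.append(f"done: {name}")
            completed.append((name, action, compensate))
        except Exception as err:
            log.append(f"failed: {name} ({err})")
            for done_name, _, undo in reversed(completed):
                undo()
                log.append(f"compensated: {done_name}")
            return False
    return True


def fail() -> None:
    raise RuntimeError("publish API unavailable")


log: List[str] = []
ok = run_saga(
    [
        ("reserve_budget", lambda: None, lambda: None),
        ("generate_draft", lambda: None, lambda: None),
        ("publish_post", fail, lambda: None),
    ],
    log,
)
print(ok)  # False
print(log)
# ['done: reserve_budget', 'done: generate_draft',
#  'failed: publish_post (publish API unavailable)',
#  'compensated: generate_draft', 'compensated: reserve_budget']
```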

As teams adopt these techniques, new hybrid patterns are emerging that combine LLM intelligence with battle-tested workflow orchestration. Workflows do not limit what LLMs can do. They provide the structure and operational guarantees needed to run intelligent systems in production with confidence.

Running Agentic Workflows with Confidence

The workflows covered earlier represent one end of the agentic spectrum, where developers define the steps and LLMs execute tasks within a fixed structure. But what happens when the agent needs to decide those steps on its own?

This is where agentic workflows come in. IBM describes the term as AI-driven processes where autonomous agents make decisions, take actions, and coordinate tasks with minimal human input. Unlike traditional workflows with hardcoded logic, agentic workflows allow the LLM to determine what to do, when to do it, and how to adapt based on outcomes. This shift introduces a critical challenge: reliability. While agent frameworks enable autonomy, they often lack the operational guarantees needed in production, such as durability, failure handling, and auditability.
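In an agentic workflow, the control flow itself comes from the model. At each step, it looks at what has happened so far and picks the next tool, or decides it is done. The sketch below shows that loop. A scripted `decide` function is a hypothetical placeholder for the LLM call, the `search_flights` tool is a stub, and a step cap serves as a basic guardrail.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A decision is (tool_name, argument), or None when the agent is finished.
Decision = Optional[Tuple[str, str]]


def run_agent(
    goal: str,
    decide: Callable[[str, List[str]], Decision],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> List[str]:
    """Loop: ask the 'model' for the next action, run it, feed back the result."""
    observations: List[str] = []
    for _ in range(max_steps):  # guardrail against runaway loops
        decision = decide(goal, observations)
        if decision is None:
            break
        tool_name, arg = decision
        observations.append(f"{tool_name}({arg}) -> {tools[tool_name](arg)}")
    return observations


def scripted_decide(goal: str, observations: List[str]) -> Decision:
    # Stand-in for an LLM: adapts its next step to earlier outcomes.
    if not observations:
        return ("search_flights", "Paris")
    if "no window seats" in observations[-1]:
        return ("search_flights", "Paris, later date")
    return None


tools = {
    "search_flights": lambda q: "no window seats" if q == "Paris" else "window seat available"
}

for line in run_agent("Flights to Paris, window seat", scripted_decide, tools):
    print(line)
# search_flights(Paris) -> no window seats
# search_flights(Paris, later date) -> window seat available
```

Nothing in `run_agent` fixes the sequence of tool calls. That is exactly why each step needs the durability and retry guarantees discussed next.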

Dapr Agents’ `AssistantAgent` addresses this gap by combining agent autonomy with workflow-grade reliability. Here is a travel-planning agent built with it:

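```python
import asyncio

from dapr_agents import AssistantAgent, tool
from dapr_agents.memory import ConversationDaprStateMemory


@tool
def search_flights(destination: str) -> str:
    """Search for flights to the given destination."""
    # Hypothetical stub tool; replace with a real flight search
    return f"Found 2 flights to {destination}"


async def main():
    travel_planner = AssistantAgent(
        name="TravelBuddy",
        role="Travel Planner",
        goal="Help users find flights and remember preferences",
        instructions=[
            "Find flights to destinations",
            "Remember user preferences",
            "Provide clear flight info",
        ],
        tools=[search_flights],
        state_store_name="workflowstatestore",
        agents_registry_store_name="registrystatestore",
        memory=ConversationDaprStateMemory(store_name="conversation"),
    )

    # Exposes REST endpoints for workflow management
    travel_planner.as_service(port=8001)
    await travel_planner.start()


if __name__ == "__main__":
    asyncio.run(main())
```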

Behind the scenes, each new interaction becomes a durable workflow instance. For example, when a user says, "Find me flights to Paris and remember I prefer window seats," the agent doesn't just respond. It creates a persistent workflow that:

- Maintains continuity across conversations

- Survives crashes and restarts

- Tracks progress with action states

- Recovers from tool failures through retries and compensation

- Produces audit trails for transparency and debugging
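Retries and audit trails work together: each tool-call attempt is retried with backoff and recorded, so a failed step can be inspected later. The following is a generic illustration, not Dapr's retry implementation. `call_with_retry` and its parameters are hypothetical, and `sleep` is injectable so the example runs instantly.

```python
import time
from typing import Any, Callable, Dict, List


def call_with_retry(
    tool: Callable[[], Any],
    audit: List[Dict[str, Any]],
    max_attempts: int = 3,
    first_delay_s: float = 0.5,
    backoff: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Any:
    """Call `tool`, retrying with exponential backoff and logging every attempt."""
    delay = first_delay_s
    for attempt in range(1, max_attempts + 1):
        try:
            result = tool()
            audit.append({"attempt": attempt, "status": "ok"})
            return result
        except Exception as err:
            audit.append({"attempt": attempt, "status": "error", "error": str(err)})
            if attempt == max_attempts:
                raise  # retries exhausted: surface the failure
            sleep(delay)
            delay *= backoff


calls = {"n": 0}


def flaky_search() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("flight API timed out")
    return "3 flights to Paris"


audit: List[Dict[str, Any]] = []
waits: List[float] = []
print(call_with_retry(flaky_search, audit, sleep=waits.append))  # 3 flights to Paris
print([entry["status"] for entry in audit])  # ['error', 'error', 'ok']
print(waits)  # [0.5, 1.0]
```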

Many agentic frameworks force a tradeoff: either use simple, stateless agents or adopt a complex workflow engine. With `AssistantAgent`, static and dynamic flows share a common runtime with consistent retry behavior, execution tracking, and observability. If one tool call fails in a multi-step agent interaction, the system resumes without losing context or restarting. You get agent intelligence with enterprise reliability.

This offers a unified architecture: developers use a single programming model, SREs operate a single system, and reliability and visibility stay consistent whether the process is driven by LLM reasoning or procedural logic. This is the convergence point of the agentic spectrum: autonomous behavior executed with production-grade discipline.

Bridging AI-Native and Cloud-Native

As teams build beyond basic LLM interactions, they face a core decision: use a general-purpose distributed system framework or adopt an AI-native one. Each path leads to different tradeoffs and operational models.

Workflow engines like Airflow, Temporal, Netflix Conductor, and Cadence provide mature building blocks for orchestrating distributed systems. But these systems were not designed for AI workloads. They lack first-class support for prompts, memory, tool usage, or autonomous agents. As a result, teams must bolt AI-specific capabilities onto infrastructure designed for traditional business logic.

On the other side, AI-native frameworks like LangGraph, CrewAI, and AutoGen come with abstractions for memory, tool calling, agent orchestration, and reasoning loops. These are powerful out of the box, but they are limited to agentic-only use cases. They often reinvent distributed systems patterns with new terminology and lack broad infrastructure connectivity. Workflows become graphs with nodes and edges, state becomes memory, service APIs become tool calls, process replays become “human-on-the-loop”, and so forth.

Building an agentic system is fundamentally building a distributed system that uses LLMs; the underlying concerns remain the same. All the classic cloud-native patterns still apply: asynchronous messaging, state coordination, API composition, service discovery, and resilience. In fact, these needs are amplified because an agentic system iterates nondeterministically between LLM calls and tool use. This is where Dapr Agents takes a different approach. It does not attempt to create a parallel infrastructure stack for AI. Instead, it adds a minimal agentic layer on top of Dapr’s battle-tested distributed systems runtime. It reuses the same APIs trusted in production: pub/sub messaging, persistent state stores, service invocation, and observability. Agent messages are delivered over standard protocols such as HTTP and gRPC. Agent memory is backed by existing databases. Agent workflows are executed by the same durable workflow engine that runs business logic. Observability events are emitted through OpenTelemetry. Security is also at the heart of Dapr, and it becomes critical in tool-calling and multi-agent systems.
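Because agents reuse Dapr's building blocks, saving memory or publishing a message is an ordinary call to the Dapr sidecar. The sketch below only *builds* the HTTP requests with the standard library, so it runs without a sidecar. The endpoint shapes follow Dapr's documented state (`/v1.0/state/{store}`) and publish (`/v1.0/publish/{pubsub}/{topic}`) HTTP APIs. The store, pubsub, and topic names are hypothetical. In practice you would typically use a Dapr SDK rather than raw HTTP.

```python
import json
import os
from typing import Any
from urllib.request import Request

# The sidecar's HTTP port is exposed to the app as DAPR_HTTP_PORT (3500 by default).
DAPR_URL = f"http://localhost:{os.environ.get('DAPR_HTTP_PORT', '3500')}"


def save_state_request(store: str, key: str, value: Any) -> Request:
    """Build a Dapr state save request: POST a JSON array of key/value pairs."""
    body = json.dumps([{"key": key, "value": value}]).encode()
    return Request(
        f"{DAPR_URL}/v1.0/state/{store}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def publish_request(pubsub: str, topic: str, event: Any) -> Request:
    """Build a Dapr publish request: POST the event as JSON to a topic."""
    return Request(
        f"{DAPR_URL}/v1.0/publish/{pubsub}/{topic}",
        data=json.dumps(event).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical names: a "conversation" state store and a "messagepubsub" broker.
memory = save_state_request("conversation", "user-42", {"seat": "window"})
message = publish_request("messagepubsub", "travel.requests", {"to": "Paris"})
print(memory.get_method(), memory.full_url)
print(message.get_method(), message.full_url)
# Sending requires a running sidecar, e.g. urllib.request.urlopen(memory)
```

The database or broker behind `conversation` and `messagepubsub` is chosen in Dapr component configuration, not in this code.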

Dapr Agents lets you build intelligence without duplicating your infrastructure. It reduces conceptual overhead, avoids redundant patterns, and lets your AI stack inherit the maturity and reliability of the microservices ecosystem.

Building Agentic Systems Without Lock-In

Not all open source is truly open. Many AI frameworks are single-vendor open source: public repos under tight vendor control. These often evolve into open core once adoption reaches critical mass. We’ve seen it happen with Redis, Elasticsearch, and MongoDB: projects that started under permissive licenses and were later locked behind restrictive licenses and cloud terms. Open core models are not very different. The community edition (for example, the core agentic framework) is typically open, but critical production features (such as observability) are gated. The result: developers can start building for free, but operations teams cannot run the system reliably without buying in.

With SaaS platforms, lock-in is even tighter. For example, OpenAI’s Agents SDK is open source. But core features like trace visualization, state storage, and agent management are only available inside OpenAI’s cloud via the Assistants API. You get the SDK, but the infrastructure is locked in. Stateful agents make this risk worse: conversation history, preferences, and tool usage become trapped assets with no portability.

The OSS lock-in playbook is well understood. Whether disguised as open source or packaged as SaaS, most frameworks trade control for convenience, and production systems pay the price.

Dapr Agents is inherently different. It builds on Dapr, a CNCF project designed from the ground up for openness, vendor neutrality, and longevity. Governed by a foundation rather than a single vendor, it ensures long-term sustainability and trust. Dapr Agents enables four core freedoms:

- Vendor neutrality by design: CNCF governance prevents unilateral licensing or roadmap changes.

- Infrastructure freedom: Swap components like Redis, PostgreSQL, Kafka, or CosmosDB without rewriting logic, and move workloads across clouds.

- Model portability: Switch between OpenAI, Anthropic (Claude), or custom models with no agent code changes.

- Observability choice: Use Prometheus, Grafana, Jaeger, or any OpenTelemetry-based stack without SaaS dependency.
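In Dapr, swapping Redis for PostgreSQL means changing a component definition, not application code. The same principle can be shown in plain Python: agent logic depends only on a small `StateStore` protocol, and two interchangeable backends plug in behind it, an in-memory dict and SQLite from the standard library. The protocol and classes are illustrative and do not reproduce Dapr's component model.

```python
import json
import sqlite3
from typing import Any, Dict, Optional, Protocol


class StateStore(Protocol):
    def save(self, key: str, value: Any) -> None: ...
    def get(self, key: str) -> Optional[Any]: ...


class InMemoryStore:
    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def save(self, key: str, value: Any) -> None:
        self._data[key] = json.dumps(value)

    def get(self, key: str) -> Optional[Any]:
        raw = self._data.get(key)
        return None if raw is None else json.loads(raw)


class SQLiteStore:
    def __init__(self, path: str = ":memory:") -> None:
        self._conn = sqlite3.connect(path)
        self._conn.execute("CREATE TABLE IF NOT EXISTS state (k TEXT PRIMARY KEY, v TEXT)")

    def save(self, key: str, value: Any) -> None:
        with self._conn:  # commits the transaction on success
            self._conn.execute(
                "INSERT OR REPLACE INTO state (k, v) VALUES (?, ?)", (key, json.dumps(value))
            )

    def get(self, key: str) -> Optional[Any]:
        row = self._conn.execute("SELECT v FROM state WHERE k = ?", (key,)).fetchone()
        return None if row is None else json.loads(row[0])


def remember_preference(store: StateStore, user: str, pref: str) -> Dict[str, Any]:
    """Agent logic: written once against the protocol, unaware of the backend."""
    profile = store.get(user) or {"preferences": []}
    if pref not in profile["preferences"]:
        profile["preferences"].append(pref)
    store.save(user, profile)
    return profile


for store in (InMemoryStore(), SQLiteStore()):
    remember_preference(store, "user-42", "window seat")
    print(type(store).__name__, store.get("user-42"))
# InMemoryStore {'preferences': ['window seat']}
# SQLiteStore {'preferences': ['window seat']}
```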

Dapr Agents is an agentic layer on top of durable workflows, messaging, and state: the same patterns that power cloud-native microservices at scale. That is what an open, enterprise-ready agentic abstraction should look like.

Build Agents You Can Extend, Operate, and Own

Developers shouldn’t need to learn a new vocabulary to build distributed systems. Operations teams shouldn’t need to adopt entirely new frameworks just to run reliable workflows. The foundational patterns for building and running scalable systems already exist, and we don’t need to reinvent them under different names. Rather, we need to extend them to meet AI-specific needs.

This is the Dapr philosophy: build on proven, reliable infrastructure instead of creating parallel stacks. Dapr Agents extends that philosophy to agentic systems by integrating LLMs into established patterns such as workflows, state, messaging, and observability.

Dapr Agents is evolving rapidly as the agentic systems landscape moves forward. Recent additions include support for the Model Context Protocol (MCP), and support for the agent-to-agent (A2A) protocol is on the way.

We invite you to join the conversation on Dapr Discord, explore the Dapr Agents University Course, and try out Dapr Agents. Your feedback will help shape what comes next.
