Dapr 1.13 Release Highlights

The Dapr maintainers released a new version of Dapr, the distributed application runtime, last week. Dapr provides APIs for communication, state, and workflow, to build secure and reliable microservices. This post highlights the major new features and changes for the APIs and components in release v1.13. See below for a recent webinar detailing the updates described in this blog.

APIs

Dapr provides an integrated set of APIs for building microservices quickly and reliably. The new APIs, and major upgrades to existing APIs, are described in this section.

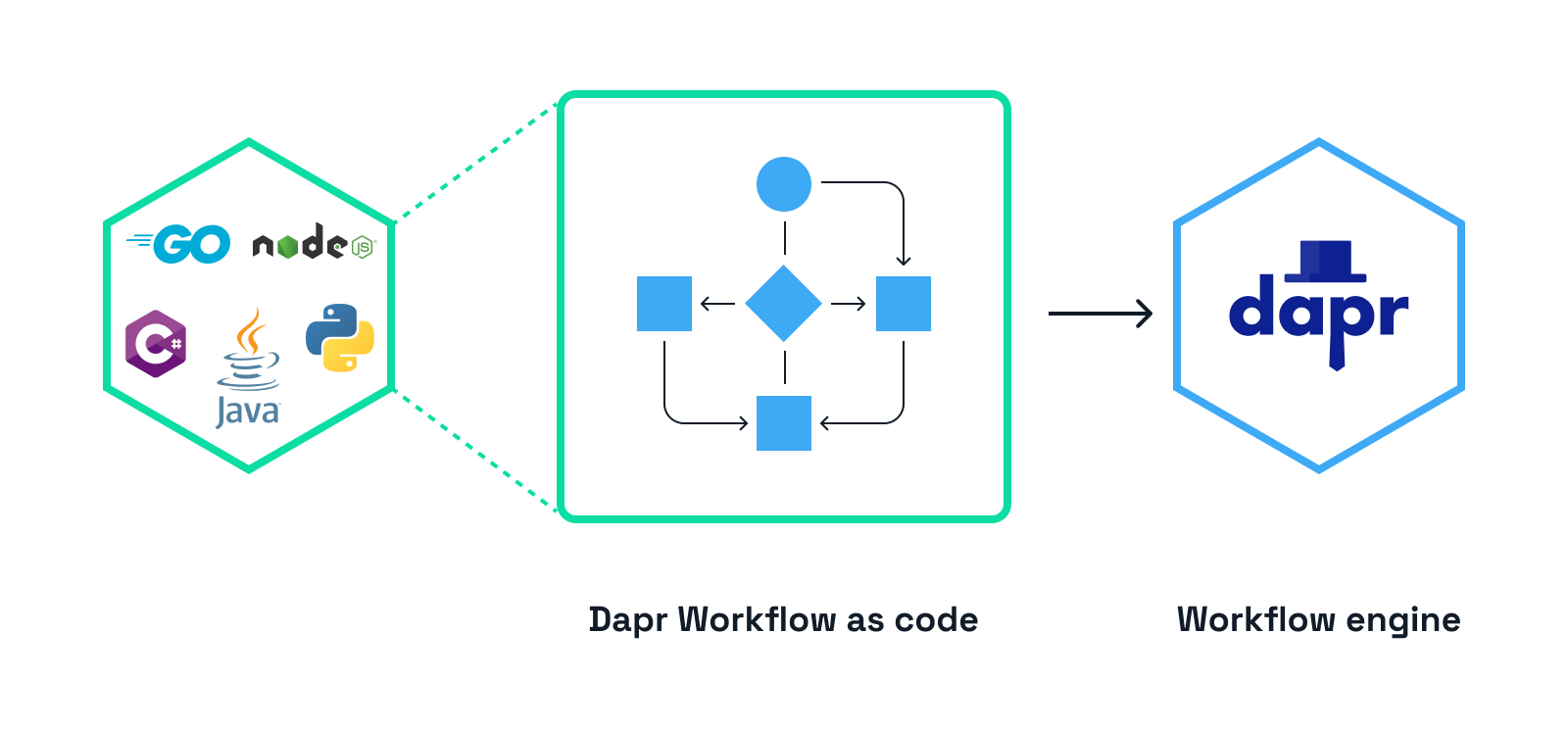

Author workflows in JavaScript/TypeScript and Go

Authoring Dapr workflows was already possible for .NET, Python, and Java. With v1.13, support for writing fault-tolerant, workflow-based apps with durable execution extends to the JavaScript and Go SDKs.

Here’s an example of a workflow in JavaScript that chains three activity calls in a sequence:

See the JavaScript SDK for more details and try the JavaScript Quickstart. Or see the Go SDK and try the Go Quickstart.

Author Actors in Rust

Rust can now be used to run Dapr Actors, a programming model for dealing with concurrency in highly scalable applications. Here’s an example of using Rust to write independent and isolated units of state and logic:

Actor support in the Rust SDK is in alpha. See the Rust SDK for more details.

Runtime

Release 1.13 includes many changes to the Dapr runtime that make it more performant and reliable. This section lists the major changes.

Component hot reloading

Component hot reloading allows the Dapr runtime to pick up component updates without restarting the Dapr process. This simplifies component changes for IT operations, since no manual intervention with the Dapr process is needed. Component hot reloading is a preview feature that can be enabled with the HotReload feature toggle.

When a component is updated, it is first closed and then re-initialized using the new configuration. The component is therefore unavailable for a short period of time during the reload.

See the docs for more information on hot reloading.

Low metrics cardinality for HTTP

An optional setting has been added to enable low cardinality for HTTP server metrics. Enabling this setting results in less memory usage when dealing with high cardinality metrics.

To enable low metrics cardinality, set spec.metrics.http.increasedCardinality to false in the Dapr Configuration resource:

The increasedCardinality: false setting will be the default from Dapr v1.14 onwards. For more information, see the high cardinality metrics section in the docs.

Delay graceful shutdown

The Dapr runtime can now delay the graceful shutdown procedure, which is useful when applications need to use Dapr APIs during their own shutdown. Set the dapr.io/block-shutdown-duration annotation or the --dapr-block-shutdown-duration flag to the number of seconds for which graceful shutdown is blocked. The Dapr process delays its shutdown for this duration or until the app reports unhealthy, whichever comes first. More information on the block shutdown duration can be found in the docs.
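To make the "for this duration or until the app reports unhealthy" rule concrete, here is a minimal Python sketch of that waiting logic. It is a simplified model, not Dapr's implementation: the function name block_shutdown, the polling interval, and the app_is_healthy callback are all assumptions made for illustration.

```python
import time
from typing import Callable


def block_shutdown(
    duration_s: float,
    app_is_healthy: Callable[[], bool],
    poll_interval_s: float = 0.1,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Hypothetical model of delaying graceful shutdown.

    Waits until `duration_s` has elapsed or until `app_is_healthy()`
    returns False, whichever happens first, and reports which one ended
    the wait. `clock` and `sleep` are injectable so the logic can be
    exercised without real waiting.
    """
    deadline = clock() + duration_s
    while clock() < deadline:
        if not app_is_healthy():
            # The app reported unhealthy: stop blocking immediately.
            return "app_unhealthy"
        # Never sleep past the deadline.
        sleep(min(poll_interval_s, deadline - clock()))
    # The full block duration elapsed while the app stayed healthy.
    return "timeout"
```

In this model, an app that finishes its cleanup early can end the delay by reporting unhealthy instead of waiting out the full duration.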

Standardized error codes

The Dapr PubSub and State Management APIs now have standardized (and more user-friendly) error codes, including enriched error details based on gRPC's richer error model. The remaining Dapr APIs are a work in progress, and community contributions towards this effort are encouraged. See additional information on the error codes in the docs. Check the SDK docs for SDK-specific error code parsing and handling. This is an example of the Go SDK error parsing and handling.
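For apps that call the HTTP API directly, handling the richer error body might look like the Python sketch below. It assumes the JSON body carries errorCode, message, and a details list whose entries are tagged with an @type field, following gRPC's richer error model. The sample payload is an illustrative placeholder rather than verbatim Dapr output, and DaprApiError and parse_dapr_error are hypothetical helper names.

```python
import json
from dataclasses import dataclass, field
from typing import Optional

# Type URL used by gRPC's richer error model for ErrorInfo details.
ERROR_INFO_TYPE = "type.googleapis.com/google.rpc.ErrorInfo"


@dataclass
class DaprApiError:
    """Hypothetical container for a parsed error response."""
    error_code: str
    message: str
    reason: Optional[str] = None  # taken from the ErrorInfo detail, if any
    details: list = field(default_factory=list)


def parse_dapr_error(body: str) -> DaprApiError:
    """Parse an error body, tolerating responses without details."""
    payload = json.loads(body)
    details = payload.get("details") or []
    reason = next(
        (d.get("reason") for d in details if d.get("@type") == ERROR_INFO_TYPE),
        None,
    )
    return DaprApiError(
        error_code=payload.get("errorCode", ""),
        message=payload.get("message", ""),
        reason=reason,
        details=details,
    )


# Illustrative payload only; field values are assumptions, not real output.
SAMPLE_BODY = json.dumps({
    "errorCode": "ERR_MALFORMED_REQUEST",
    "message": "invalid state key",
    "details": [
        {"@type": ERROR_INFO_TYPE, "domain": "dapr.io",
         "reason": "DAPR_STATE_ILLEGAL_KEY"},
    ],
})

err = parse_dapr_error(SAMPLE_BODY)
print(err.error_code, err.reason)
```

Because the details list is optional in this sketch, the same helper also accepts bodies that only contain errorCode and message, in which case reason is None.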

Old State Management HTTP API error message for an invalid key:

New State Management HTTP API message for an invalid key:

Improved Dapr Actor reminder performance

Actor reminders can now use protobuf serialization instead of JSON. This increases throughput, reduces latency, and improves stability when multiple Dapr instances are operating on the same reminders. Using protobuf serialization is currently an opt-in feature and will become the default serialization setting in v1.14.

To enable protobuf serialization for actor reminders, set the following Helm argument on Kubernetes:

In self-hosted mode, run daprd with the flag:

Because this setting stores the reminders in a different format that earlier Dapr versions can’t read, downgrading to an earlier version of Dapr is not recommended once protobuf serialization is enabled.

Components

Dapr decouples the functionality of the integrated set of APIs from their underlying implementations via components. Components of the same type are interchangeable since they implement the same interface. Release 1.13 contains both new components and improvements to existing components.

New components

Local name resolver based on SQLite

SQLite can now be used as a name resolver for service invocation in self-hosted mode. This is a useful alternative when mDNS is blocked by corporate firewalls or VPNs.

This is the Dapr Configuration resource for using SQLite for name resolution:

See the docs for additional information.
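The idea behind a database-backed resolver can be sketched in a few lines of Python: instead of discovering peers over mDNS, each app records its address in a shared SQLite file and callers look it up by app ID. This is a toy model under stated assumptions. The hosts table, its columns, and the helper names are hypothetical and do not reflect the schema the Dapr component actually uses.

```python
import sqlite3
from typing import Optional


def open_registry(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) a hypothetical name registry in SQLite."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS hosts ("
        " app_id TEXT NOT NULL,"
        " address TEXT NOT NULL,"
        " PRIMARY KEY (app_id, address))"
    )
    return conn


def register(conn: sqlite3.Connection, app_id: str, address: str) -> None:
    """Record that `app_id` is reachable at `address`."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT OR REPLACE INTO hosts (app_id, address) VALUES (?, ?)",
            (app_id, address),
        )


def resolve(conn: sqlite3.Connection, app_id: str) -> Optional[str]:
    """Return one known address for `app_id`, or None if unregistered."""
    row = conn.execute(
        "SELECT address FROM hosts WHERE app_id = ? ORDER BY address LIMIT 1",
        (app_id,),
    ).fetchone()
    return row[0] if row else None


registry = open_registry()
register(registry, "orders", "127.0.0.1:50001")
print(resolve(registry, "orders"))
```

Since the lookup is a plain file read on the local machine, nothing in this approach depends on multicast traffic that a firewall or VPN could block.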

Postgres state store v2

The Postgres state store component has a new v2 implementation with increased performance and reliability. The value data stored by this component is now of type BYTEA, which allows faster queries and, in some cases, is more space-efficient than the previously used JSONB type.

New applications are encouraged to use v2. The v1 implementation remains supported and is not deprecated.

See the docs for additional info.

Azure Blob Storage state store v2

The Azure Blob Storage state store component has a new v2 implementation, which contains an important bug fix related to key prefixes (this new version now correctly respects the keyPrefix setting).

New applications are encouraged to use v2. The v1 implementation remains supported and is not deprecated.

See the docs for additional info.

Component improvements

Many component improvements have been made across several APIs, such as:

Bindings

Improved the error messages for object-not-found errors in AWS S3, Azure Blob Storage, GCP bucket, and Huawei OBS.

Kafka: Added support for AWS IAM with AWS Kafka clusters.

Pub/Sub

AWS SQS: Fixed errors during Dapr shutdown and with message polling behavior.

GCP PubSub: Reduced unnecessary administrator calls when publishing to a GCP PubSub Topic.

- Added support for AWS IAM with AWS Kafka clusters.

- Added Avro schema registry support.

- Added the message key and other message metadata as metadata in the consumer.

- Added more configuration options to control prefetching and concurrency.

- Redis: Added support for message metadata.

Secret stores

The AWS Secret Manager and AWS SSM Parameter Store components now validate their connection with AWS.

State stores

AWS DynamoDB: Added connection validation.

Azure Blob Storage (v1): Added disableEntityManagement metadata option to work with minimal Microsoft Entra ID permissions.

Azure CosmosDB: Added ability to pass explicit partition key in Query method.

Cassandra: Added TLS verification support via metadata option EnableHostVerification.

MS SQL Server / MySQL / PostgreSQL (v1) / SQLite: Improved performance of Multi invocations that contain only 1 operation.

MySQL & SQLite: Improved performance of Set operations with first-write-wins.

Redis: Fixed numeric operators not working correctly on large numbers when querying.

SQLite: Limited to 1 concurrent connection when connecting to an in-memory database.

Name resolver

Consul: Added local in-memory caching of Consul responses to make name resolution more performant.

Azure components

Added the ability to specify the Azure authentication order via the azureAuthMethods component metadata.

What is next?

This post is not a complete list of features and changes released in version 1.13. Read the official Dapr release notes for more information. The release notes also contain information on how to upgrade to this latest version.

Excited about these features and want to learn more? I'll cover the new features in more detail in future posts. Until then, join the Dapr Discord to connect with thousands of Dapr users.
