Go with the (Work)Flow: AI-Driven Code Generation for Workflows

Workflows are at the heart of modern, cloud-native systems. They coordinate long-running business processes, enforce durability, and provide resilience. But building them by hand is slow, repetitive, and error-prone.

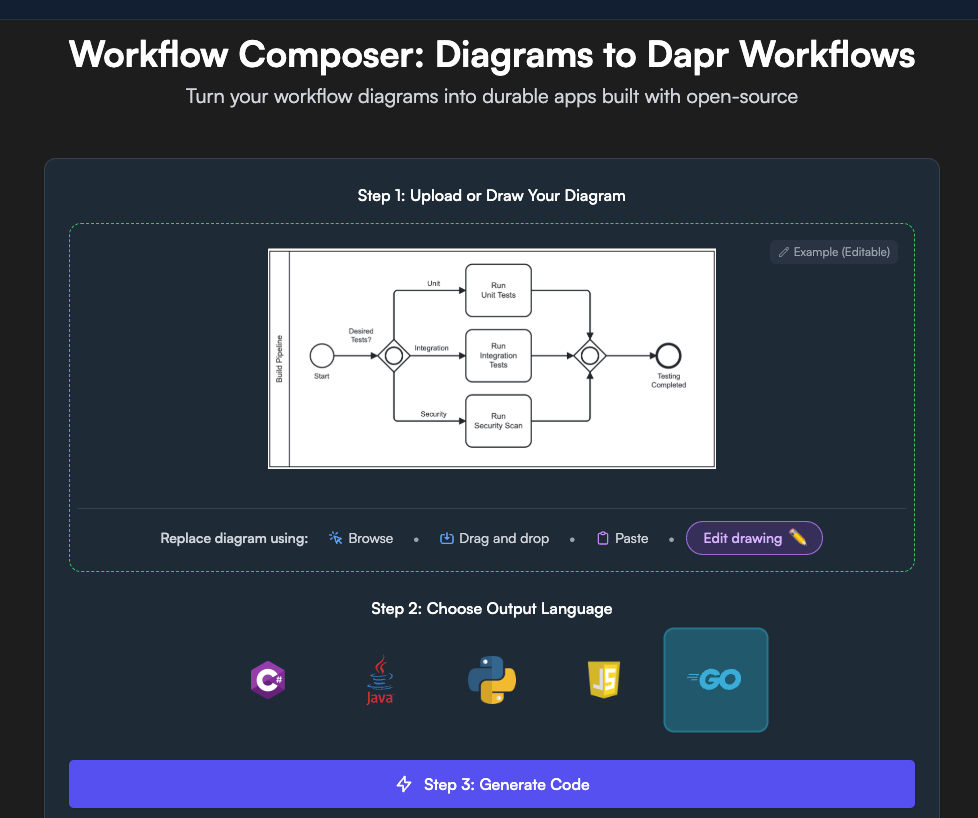

This post walks through how we designed Workflow Composer, a system that uses AI to transform workflow diagrams into ready-to-use Dapr Workflow applications in C#, Java, Python, JavaScript, and Go. The architecture is built around three key ideas:

- An Intermediate Representation (IR) for workflow logic

- A graph Visitor pattern, implemented in Go, that generates type-safe workflow code

- Project generation that assembles the complete, ready-to-run output project

The Challenge: From Diagrams to Durable Code

Traditionally, implementing a workflow looks like this:

- Take a diagram (BPMN, flowchart, etc.)

- Manually translate nodes into workflow functions

- Add retry, compensation, and durable execution logic

- Ensure the workflow follows best practices and is functionally correct

- Add observability, such as metrics and tracing, for debugging

That process can take weeks for complex workflows. We wanted a system that could:

- Automatically generate type-safe, idiomatic workflows

- Support C#, Java, Python, JavaScript, and Go workflow applications (the languages supported by Dapr SDKs)

- Support patterns like parallel execution, branching, timers, and child workflows

- Guarantee Dapr Workflow determinism out of the box

- Produce a ready-to-use project

Workflow Composer: AI + Go + Dapr

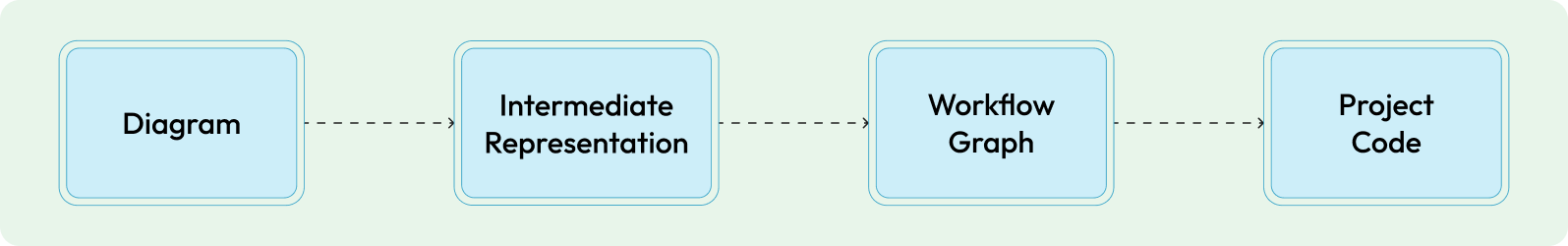

Workflow Composer bridges this gap. Instead of jumping from a diagram straight to code, it passes through an Intermediate Representation (IR). This intermediate step helps reduce LLM hallucinations, inaccuracies, and uncompilable code.

The flow proceeds in four stages: AI-powered diagram analysis into an IR, graph construction, code generation, and complete project generation.

We built the backend in Go because many of us at Diagrid are Go developers. This let us use our language of choice to accept an input diagram and pass it, alongside our prompt, to an Anthropic Sonnet model via the Anthropic Go SDK, which generates an intermediate representation of the diagram. Let's look at each stage in more depth.

Stage 1: AI-Powered Diagram Analysis → IR

When you provide an input diagram, we pass the image and our system prompt to the Anthropic Sonnet model configured in our Anthropic Go SDK client. The prompt instructs the model to extract the workflow's structure and objects into an IR.

We chose to use an LLM for diagram interpretation for a few reasons.

- Universal image processing: Unlike traditional computer vision approaches that require specific parsers for each diagram format (BPMN, Excalidraw, hand-drawn sketches, Mermaid, etc.), LLMs can understand visual elements across all these formats. They can recognize workflow patterns, shapes, and connections regardless of the tool used to create the diagram, which took some of the engineering load off of us.

- Semantic understanding: LLMs excel at understanding context and meaning, not just visual patterns. They can distinguish between a database icon that's part of the workflow logic versus one that's just contextual information. This semantic understanding is crucial for generating meaningful workflow code.

- Early error handling: Our prompt includes sophisticated error handling and validation. If a diagram isn't a workflow, is too complex, or contains ambiguous elements, the LLM can provide specific feedback to help users fix their diagrams. We also added things like activity count restrictions to keep cost within reason.

- Streamable output: We use a JSON Lines format in which each line is a complete JSON object. This lets us process large diagrams without hitting token or memory limits, validate each element independently, and give users early feedback as processing proceeds.

With this approach, every diagram type maps to a uniform structure regardless of the input format, so all workflow diagrams express the same fundamental concepts and we have a consistent format to build from:

- Activities: small units of work within the workflow

- Gateways: branches and joins

- Participants: roles or systems performing work

- Data objects: how information moves within the workflow

- Edges: control flow connections between elements

- Start/End nodes: workflow boundaries

Our IR captures these concepts in a language-agnostic format that works for any diagram type. See the following IR JSON line (pretty-printed here for readability) for reference:

```json
{
  "__type": "activity",
  "id": "get_talk_accepted",
  "type": "wait_for_event",
  "participant": "presenter",
  "description": "Waits for talk acceptance notification",
  "data_objects_flow": [],
  "events": [
    {
      "event_type": "message",
      "name": "TalkAccepted",
      "event_label": "talk_acceptance_received",
      "next": "gw_celebration_decision"
    }
  ],
  "asynchronous": true,
  "multi_instance_type": null,
  "confidence": "high"
}
```

Stage 2: Graph Construction

From the IR, we build a workflow graph that translates abstract workflow concepts into concrete, executable structures.

The IR-to-graph conversion process involves several steps:

- Node creation: each IR element becomes a typed node in the graph:

  - Activity nodes represent tasks to be completed

  - Gateway nodes handle branching logic (exclusive, inclusive, parallel, event-based)

  - Start/end nodes define the workflow boundaries for traversal

  - Convergence points: nodes where multiple execution paths meet

- Edge construction: control flow connections between nodes:

  - Sequential flow: direct execution paths between activities that are synchronous

  - Conditional flow: branching based on gateway decisions

  - Parallel flow: concurrent execution paths

  - Event flow: asynchronous event-driven transitions

- Topological sorting: Ensures correct execution order by analyzing dependencies and creating a deterministic sequence that respects all workflow constraints.

As the various steps, node types, and edge types show, graph construction was fairly involved. However, it made complex patterns explicit and manageable, so we could safely build and traverse a complete, deterministic graph:

- Convergence Logic: When multiple execution paths merge (like after parallel branches), our system:

  - Identifies true convergence points

  - Creates separate functions for convergence logic to avoid code duplication

  - Ensures proper synchronization of parallel branches

- Parallel Execution: For parallel gateways, our system:

  - Detects diverging parallel gateways and their corresponding convergence points

  - Generates concurrent execution code using language-specific primitives

  - Handles synchronization to ensure all/any parallel branches complete before proceeding

- Loop Handling: For iterative patterns, our system:

  - Identifies loop sources and targets

  - Distinguishes between loop back edges and true convergence points

  - Generates appropriate loop control structures

  - For this first iteration, supports only loops back to the beginning of the workflow, implemented by having the workflow continue as new. Loops were a complex addition to the solution, so we deliberately started with this narrower scope.

Before generating any code, we run the graph through a series of validation checks to make sure it is a sound foundation for the project. We validate graph connectivity so that every node is reachable and connected, verify that there are no dead ends other than workflow completion at the end node, guard against unintended infinite loops, and check that every gateway has proper inbound and outbound edges. Graph validation was critical, and we relied heavily on an extensive suite of unit tests built with Go's AST package.
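A few of these checks can be sketched in Go as follows. This is a hypothetical illustration, not the production validator: the `graph` type and `validate` method are our own names, the infinite-loop check is omitted, and the gateway rule (a gateway must either split with 1+ inbound and 2+ outbound edges, or join with 2+ inbound and 1+ outbound) is an assumption.

```go
package main

import (
	"errors"
	"fmt"
	"sort"
)

type nodeKind int

const (
	kindStart nodeKind = iota
	kindActivity
	kindGateway
	kindEnd
)

// graph stores node kinds and outgoing edges by node ID.
type graph struct {
	kinds map[string]nodeKind
	out   map[string][]string
}

// validate collects every problem instead of stopping at the first one.
func (g graph) validate(start string) error {
	var errs []error

	// Reachability: breadth-first search from the start node.
	seen := map[string]bool{start: true}
	queue := []string{start}
	for len(queue) > 0 {
		n := queue[0]
		queue = queue[1:]
		for _, t := range g.out[n] {
			if !seen[t] {
				seen[t] = true
				queue = append(queue, t)
			}
		}
	}

	// Count incoming edges for the gateway check.
	in := map[string]int{}
	for _, targets := range g.out {
		for _, t := range targets {
			in[t]++
		}
	}

	// Check nodes in sorted order so error messages are deterministic.
	names := make([]string, 0, len(g.kinds))
	for n := range g.kinds {
		names = append(names, n)
	}
	sort.Strings(names)

	for _, n := range names {
		kind, outs := g.kinds[n], len(g.out[n])
		if !seen[n] {
			errs = append(errs, fmt.Errorf("%s: not reachable from %s", n, start))
		}
		if outs == 0 && kind != kindEnd {
			errs = append(errs, fmt.Errorf("%s: dead end (only end nodes may lack outgoing edges)", n))
		}
		isSplit := in[n] >= 1 && outs >= 2
		isJoin := in[n] >= 2 && outs >= 1
		if kind == kindGateway && !isSplit && !isJoin {
			errs = append(errs, fmt.Errorf("%s: gateway lacks proper inbound/outbound edges", n))
		}
	}
	return errors.Join(errs...) // nil when errs is empty (Go 1.20+)
}

func main() {
	g := graph{
		kinds: map[string]nodeKind{"start": kindStart, "gw": kindGateway, "a": kindActivity, "end": kindEnd},
		out:   map[string][]string{"start": {"gw"}, "gw": {"a"}, "a": {"end"}},
	}
	fmt.Println(g.validate("start")) // the gateway only has one outgoing edge
}
```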

Stage 3: Code Generation with the Visitor Pattern

The validated graph is then traversed by a graph Visitor implemented in our Go backend. The visitor pattern is a design pattern that separates an algorithm from the object structure it operates on. Here, the object structure is the workflow graph, and the algorithm is the visitor, which handles graph traversal and generates code as it goes.
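As a minimal sketch of the pattern (not Workflow Composer's actual types), each node type implements `Accept`, which calls back into the matching `Visit` method, so emission logic lives entirely in the visitor. `ActivityNode`, `ExclusiveGatewayNode`, `goEmitter`, and the emitted `callActivity(...)` call are all hypothetical placeholders, not Dapr SDK APIs.

```go
package main

import (
	"fmt"
	"strings"
)

// Visitor has one method per node type; each target language can supply its own.
type Visitor interface {
	VisitActivity(n *ActivityNode)
	VisitExclusiveGateway(n *ExclusiveGatewayNode)
}

// Node is anything in the workflow graph that can accept a visitor.
type Node interface{ Accept(v Visitor) }

type ActivityNode struct{ Name string }

type ExclusiveGatewayNode struct {
	Condition  string
	Then, Else []Node
}

func (n *ActivityNode) Accept(v Visitor)         { v.VisitActivity(n) }
func (n *ExclusiveGatewayNode) Accept(v Visitor) { v.VisitExclusiveGateway(n) }

// goEmitter is one concrete visitor that writes indented, Go-like source.
type goEmitter struct {
	b      strings.Builder
	indent int
}

func (e *goEmitter) line(format string, args ...any) {
	e.b.WriteString(strings.Repeat("\t", e.indent))
	fmt.Fprintf(&e.b, format, args...)
	e.b.WriteByte('\n')
}

func (e *goEmitter) VisitActivity(n *ActivityNode) {
	e.line("callActivity(%q)", n.Name)
}

// An exclusive gateway becomes an if/else; child nodes are visited recursively.
func (e *goEmitter) VisitExclusiveGateway(n *ExclusiveGatewayNode) {
	e.line("if %s {", n.Condition)
	e.indent++
	for _, c := range n.Then {
		c.Accept(e)
	}
	e.indent--
	if len(n.Else) > 0 {
		e.line("} else {")
		e.indent++
		for _, c := range n.Else {
			c.Accept(e)
		}
		e.indent--
	}
	e.line("}")
}

func main() {
	workflow := []Node{
		&ActivityNode{Name: "SubmitTalk"},
		&ExclusiveGatewayNode{
			Condition: "accepted",
			Then:      []Node{&ActivityNode{Name: "Celebrate"}},
			Else:      []Node{&ActivityNode{Name: "Revise"}},
		},
	}
	e := &goEmitter{}
	for _, n := range workflow {
		n.Accept(e)
	}
	fmt.Print(e.b.String())
}
```

Adding a new target language means writing another `Visitor`; the node types and traversal stay untouched.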

The visitor pattern translates the abstract graph into concrete, executable workflow constructs in the appropriate language of choice:

- Dapr Workflow Activities: Each activity node becomes a callable workflow activity with:

  - Proper input/output parameter handling

  - Retry configuration

  - Error handling

- Control Flow Structures: Gateways translate into:

  - Conditional statements (if/else, switch) for exclusive gateways

  - Parallel execution primitives for parallel gateways

  - Events to invoke for event-based gateways

  - Loop constructs for iterative patterns

- State Management: The visitor ensures:

  - Proper workflow state initialization

  - Context passing between activities

  - State persistence for long-running workflows

  - Deterministic execution guarantees

Once the workflow constructs were in place and the traversal was producing project code, we delegated language-specific output to code generators behind a common language generator interface, which each concrete language type implements with its corresponding workflow code. The interface kept feature implementation uniform across languages and SDKs, kept our code clean, and let each generator follow its language's best practices and idioms. Supporting C#, Java, Python, JavaScript, and Go workflow applications within a single backend without errors was one of the most challenging aspects of the solution, and it required extensive research to familiarize ourselves with the other languages.

We also used Go's embed package to embed static content such as README.md files, Makefiles, and configuration files that round out the resulting project. After writing the static content, we fill in the dynamic content, using the concrete language generator to produce the workflow code.
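The split between static and dynamic content can be sketched as follows. In a real service the assets would come from an `embed.FS` populated by a `//go:embed` directive; because that needs files on disk, this self-contained sketch substitutes `fstest.MapFS`, which implements the same `fs.FS` interface. The `static/` and `templates/` layout, `projectData`, and `renderProject` are hypothetical.

```go
package main

import (
	"bytes"
	"fmt"
	"io/fs"
	"sort"
	"strings"
	"testing/fstest"
	"text/template"
)

// assets stands in for an embed.FS; both satisfy fs.FS.
var assets fs.FS = fstest.MapFS{
	"static/Makefile":          {Data: []byte("build:\n\tgo build ./...\n")},
	"templates/README.md.tmpl": {Data: []byte("# {{.WorkflowName}}\n\nActivities: {{join .Activities \", \"}}\n")},
}

// projectData is the workflow-specific input to the templates.
type projectData struct {
	WorkflowName string
	Activities   []string
}

// renderProject copies static files verbatim and executes *.tmpl files,
// returning a map of output path to file contents.
func renderProject(fsys fs.FS, data projectData) (map[string]string, error) {
	out := map[string]string{}
	funcs := template.FuncMap{"join": strings.Join}
	err := fs.WalkDir(fsys, ".", func(path string, d fs.DirEntry, err error) error {
		if err != nil {
			return err
		}
		if d.IsDir() {
			return nil
		}
		raw, err := fs.ReadFile(fsys, path)
		if err != nil {
			return err
		}
		switch {
		case strings.HasPrefix(path, "static/"):
			out[strings.TrimPrefix(path, "static/")] = string(raw)
		case strings.HasPrefix(path, "templates/") && strings.HasSuffix(path, ".tmpl"):
			tmpl, err := template.New(path).Funcs(funcs).Parse(string(raw))
			if err != nil {
				return err
			}
			var buf bytes.Buffer
			if err := tmpl.Execute(&buf, data); err != nil {
				return err
			}
			out[strings.TrimSuffix(strings.TrimPrefix(path, "templates/"), ".tmpl")] = buf.String()
		}
		return nil
	})
	return out, err
}

func main() {
	files, err := renderProject(assets, projectData{
		WorkflowName: "TalkSubmission",
		Activities:   []string{"SubmitTalk", "Celebrate"},
	})
	if err != nil {
		fmt.Println(err)
		return
	}
	names := make([]string, 0, len(files))
	for n := range files {
		names = append(names, n)
	}
	sort.Strings(names)
	for _, n := range names {
		fmt.Printf("== %s ==\n%s", n, files[n])
	}
}
```

Swapping `fstest.MapFS` for an `embed.FS` variable requires no change to `renderProject`, since it only depends on `fs.FS`.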

For Go, this generator produces idiomatic code with:

- Correct Go module structure (go.mod, main.go)

- Dapr Workflow SDK imports and Dapr client calls

- Strong typing with structs and models

- Proper Go-style error handling (if err != nil) and logging

Overall, the visitor pattern ensures that the generated code follows language-specific conventions while maintaining the same workflow logic across all supported languages (C#, Java, Python, JavaScript, and Go).

Stage 4: Complete Project Code Generation

The output isn’t a single file—it’s a ready-to-run project with everything wired up:

```text
├── 🛠 Makefile
├── 📷 diagram_image.png
├── 📄 README.md
├── 🧩 components/
│   ├── pubsub.yaml
│   └── statestore.yaml
├── 🧠 workflow/
│   ├── activities.go
│   ├── activity_model.go
│   ├── simple_logger.go
│   ├── workflow.go
│   ├── workflow_data.go
│   └── workflow_result.go
├── 🌐 workflow-jetbrains.http (.http request file for JetBrains IDEs)
└── 🌐 workflow-vscode.http (.http request file for VS Code)
```

Developers can immediately build, run, and extend the project, customizing it to their business use case and data models.

Results

Unlike AI code generators that can produce code with syntax errors, type mismatches, or runtime failures, Workflow Composer is intentionally designed so that every generated workflow compiles and runs safely. It achieves this through the techniques covered in this post:

- Structured IR Validation: The JSON Lines IR format includes comprehensive validation rules that catch structural issues before code generation begins

- Graph-Based Type Inference: The workflow graph construction process analyzes data flow and ensures type consistency across all activities and gateways

- Language-Specific Type Systems: Each visitor implementation enforces the target language's type system, generating strongly-typed structs, interfaces, and function signatures

- Deterministic Code Generation: The visitor pattern ensures consistent, predictable code structure that follows language idioms and best practices

- Compile-Time Error Prevention: The system validates workflow logic, gateway conditions, and data flow at graph construction time, preventing runtime errors

Overall, the system handles complex workflow patterns, including parallel execution, error handling, compensation logic, and long-running processes, with the same reliability as hand-written code, in a fraction of the time and with far less risk of human error. Workflow Composer doesn't just generate code: it generates type-safe, ready-to-use workflow applications that meet enterprise standards for reliability and maintainability and that can be further customized for the business use case.

This approach has yielded significant benefits that work to differentiate Workflow Composer from other AI code generation solutions:

- Development time reduced from weeks → minutes

- Type safety + compilation guaranteed by design

- Ready-to-use: output projects run with Dapr immediately

Looking Ahead

Workflow Composer is just the start. Future enhancements include:

- AI-assisted generation of activity logic

- Support for the latest features in the upcoming Dapr 1.16 release

- Integration with Dapr Agents for LLM-driven autonomous workflows

We've already made progress on further enhancements, such as a code prettification feature that is currently deployed in our development environment so we can test it before shipping. It makes the generated project code more specific to the supplied data objects and use cases. The feature currently supports only Go: we typically add new features to Go first, since it's the language we're most comfortable with, before rolling them out to the other supported languages.

Stay tuned for more exciting updates and enhancements for Workflow Composer!

Conclusion

By combining AI-powered diagram analysis with Go's strong typing and Dapr Workflow's durability, we've created a system that closes the gap between design and implementation for C#, Java, Python, JavaScript, and Go.

With Workflow Composer, you can go from diagram → ready-to-use workflow projects in minutes, while following best practices for cloud-native resilience and fault tolerance.

This is just the beginning! In the upcoming 1.17 release, we’re adding powerful new capabilities to Dapr Workflows, including the ability to rerun a workflow from a specific point in history, support for cross-language activity calls, and scenarios where a workflow can be hosted in one application while calling activities or even entire workflows in another. We’re also introducing workflow versioning to make long-lived workflow management easier. Finally, Dapr Agents, built on top of Dapr Workflows, extends these foundations to deliver reliability and durability for agentic applications.

👉 Try it here: Workflow Composer
