It has been over two decades since microservices first hit production. Each service was “small”, autonomous, and ephemeral. But without a shared identity, every deployment looked the same to the network. Who was calling whom? Could this service be trusted? Developers solved it with static secrets and shared keys until security and compliance caught up, forcing the industry to invent workload identity: mTLS, SPIFFE, trust federation, and ultimately the service mesh.

AI agents are today’s microservices moment all over again.

Agents act autonomously, chain to other agents, and operate across APIs, clouds and model providers. The key difference between traditional microservices and agents is that agents don't just make pre-defined calls; they make decisions. But just like the old days before zero trust in microservices, we’re handing them static API keys and long-lived credentials.

We don’t yet have:

- A consistent way to identify an agent instance

- A way to verify which human or workload delegated authority to the agent

- Short-lived credentials tied to runtime agent identity

- Cross-cloud federation and auditability in the context of the agent

The result? Exactly the same kind of credential sprawl that early microservice adopters experienced.
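To make "short-lived credentials tied to runtime agent identity" concrete, here is a minimal sketch using only the Python standard library. It is not any vendor's token format: the claim names (`sub`, `delegated_by`, `scopes`, `exp`), the shared `SIGNING_KEY`, and both function names are assumptions made for illustration. A real issuer would more likely use asymmetric keys and an established token standard.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical demo key; a real issuer would keep key material in a KMS.
SIGNING_KEY = b"demo-only-signing-key"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode("ascii")


def mint_token(agent_id, delegated_by, scopes, ttl_seconds=300, now=None):
    """Issue a signed credential naming the agent, its delegator, and an expiry."""
    now = time.time() if now is None else now
    claims = {
        "sub": agent_id,               # which agent instance this is
        "delegated_by": delegated_by,  # which human or workload granted authority
        "scopes": sorted(scopes),      # what the agent may do
        "exp": now + ttl_seconds,      # when the credential stops working
    }
    payload = json.dumps(claims, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()
    return _b64(payload) + "." + _b64(signature)


def verify_token(token, now=None):
    """Return the claims if the token is authentic and unexpired, else None."""
    now = time.time() if now is None else now
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:  # wrong shape or invalid base64
        return None
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return None
    claims = json.loads(payload)
    if claims["exp"] <= now:
        return None
    return claims
```

Unlike a static API key, a token minted this way answers "which agent?" and "on whose behalf?" and expires on its own after a few minutes.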

Service mesh runtimes, widely adopted open standards, and a shared understanding of zero trust got us to a much better world with microservice architectures. We now need the same for agents: a foundational identity and policy layer. The problem is not new, but the framework for applying identity to agents remains to be established.

What AI Agents Actually Are

There is a lot of mythology around AI agents: emergent SkyNet-esque personalities, autonomous human-like reasoning, self-directed stamp-collecting barons. But most production-deployed agents today follow the same pattern: deterministic for-loops calling language models and invoking tools.

At its core, an AI agent is software following the same business workflow loop we have been using to implement deterministic operators for decades:

1. Get current state: Collect context (input, system state, environment)

2. Derive desired state: Use an LLM to select the next action(s)

3. Reconcile action: Execute a tool or API call

4. Reconcile state: Inspect the result, update state

5. GOTO 1

Agents are for-loops, following the classical deterministic operator feedback loop model you see from Kubernetes controllers to embedded thermostats. The “magic” of agents is the bit in the middle where they use an LLM to derive the desired state and make decisions on which action(s) to take.
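The loop described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a real agent framework: `run_agent`, `fake_llm`, and the `heat` tool are hypothetical names, and `fake_llm` is a plain function standing in for the model call so the example runs without any model provider.

```python
def run_agent(state, choose_action, tools, max_steps=10):
    """Run the get-state / decide / act / update loop for at most max_steps."""
    for _ in range(max_steps):
        observed = dict(state)             # get current state
        action = choose_action(observed)   # derive desired state (the "LLM" step)
        if action is None:                 # nothing left to reconcile
            break
        result = tools[action](observed)   # reconcile action: execute a tool
        state = {**state, **result}        # reconcile state: fold the result in
    return state


def fake_llm(state):
    """Stand-in for a model call: behaves like a thermostat."""
    if state["temp"] < state["target"]:
        return "heat"
    return None


tools = {"heat": lambda s: {"temp": s["temp"] + 1}}

final = run_agent({"temp": 18, "target": 21}, fake_llm, tools)
print(final)  # {'temp': 21, 'target': 21}
```

Swapping `fake_llm` for a real model call changes how the action is chosen, not the shape of the loop. The `max_steps` bound is a design choice that keeps a misbehaving decision step from looping forever.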

Tools: Where Agents Actually Do Work

LLMs don’t manipulate the real world; they emit instructions. It is the tools in the reconcile-action step that translate the model's intent into real-world behaviour. These tools can be anything from telling the time, to database queries, to full cloud resource operations; maybe even collecting stamps. Tools represent the point of highest risk in terms of access privilege, dollar cost, and attack surface, so tools are where identity matters the most. They require authentication, authorization and auditability, just like the microservices of today.

MCP Servers: Standardizing the Agent-to-Tool Boundary

Under this paradigm, it becomes immediately obvious that tools need a standard way to be discovered and to share structured schemas. This is where the classic protocol and abstraction layers come in, this time with the apt name Model Context Protocol (MCP). An MCP server (local or remote) provides the set of tools available to the LLM, together with their schemas and parameter signatures, which the LLM uses to derive the desired state and the actions to get there.
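As a rough illustration of what "schemas and parameter signatures" means in practice, the sketch below describes one tool as a name, a description, and a JSON-Schema-style input definition, then checks a proposed call against it. The field names (`name`, `description`, `inputSchema`) follow the shape of MCP tool listings as we understand them; treat them as an assumption and check the specification. The `get_time` tool and the hand-rolled `validate_call` helper are hypothetical, and a real server would use an MCP SDK and a full JSON Schema validator.

```python
# Hypothetical tool catalogue, shaped like an MCP-style tool listing.
TOOL_CATALOGUE = [
    {
        "name": "get_time",
        "description": "Return the current time in a given timezone.",
        "inputSchema": {
            "type": "object",
            "properties": {"timezone": {"type": "string"}},
            "required": ["timezone"],
        },
    },
]

# Only the JSON types this sketch needs.
_JSON_TYPES = {"string": str, "integer": int}


def validate_call(tool_name, arguments):
    """Return a list of problems with a proposed call; empty means acceptable."""
    tool = next((t for t in TOOL_CATALOGUE if t["name"] == tool_name), None)
    if tool is None:
        return [f"unknown tool: {tool_name}"]
    schema = tool["inputSchema"]
    problems = []
    for key in schema.get("required", []):
        if key not in arguments:
            problems.append(f"missing required argument: {key}")
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            problems.append(f"unexpected argument: {key}")
        elif not isinstance(value, _JSON_TYPES[spec["type"]]):
            problems.append(f"wrong type for {key}")
    return problems
```

A schema check like this says whether a call is well-formed. It says nothing about who is making the call.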

While MCP servers provide a clear security boundary, that doesn't solve identity in itself. MCP assumes there is already a trustworthy entity that can sign tokens, assign scopes, and validate callers. Right now, teams fake this with API keys, static tokens, or trust-by-configuration, exactly how early microservices did before workload identity matured.

Code-First Workflows

Beyond the marketing hype, most real-world AI systems are not “fully autonomous agents.” They are code-first workflows with pockets of statistical reasoning injected at key decision points.

The architecture looks like this:

deterministic workflow

→ ask LLM which step to run

→ execute step (tool)

→ check result

→ maybe ask LLM again

This is an engineering pattern whereby humans define the workflow structure, models provide judgement, heuristics and interpretation, and the code implements the predictable system behavior. Typical decision points look like:

- “Complete the code for this function”

- “Pick the right prompt template and tool for this holiday request”

- “Generate SQL, validate it, and run it if safe”

This is fundamentally a workflow system with AI in the middle.
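The “Generate SQL, validate it, and run it if safe” example can be sketched as a code-first workflow in which the model only fills in one step. Everything here is illustrative: `fake_llm_generate_sql` is a hard-coded stand-in for a model call, the `employees` table is invented, and `is_safe` is a deliberately crude string check rather than a real SQL safety guarantee. In production, read-only database credentials would do the real enforcement.

```python
import sqlite3


def fake_llm_generate_sql(question):
    """Stand-in for a model call that turns a question into SQL."""
    return "SELECT name FROM employees WHERE on_holiday = 1 ORDER BY name"


def is_safe(sql):
    """Deterministic gate: accept only a single SELECT statement."""
    stripped = sql.strip().rstrip(";")
    return stripped.upper().startswith("SELECT") and ";" not in stripped


def answer(conn, question):
    sql = fake_llm_generate_sql(question)   # the model provides judgement
    if not is_safe(sql):                     # the code enforces the boundary
        raise PermissionError(f"refusing to run: {sql!r}")
    return conn.execute(sql).fetchall()      # the code performs the action


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, on_holiday INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Ada", 1), ("Bo", 0), ("Cy", 1)],
)

rows = answer(conn, "Who is on holiday?")
print(rows)  # [('Ada',), ('Cy',)]
```

The workflow structure (generate, validate, run) is fixed by the programmer. Only the content of the generate step is probabilistic.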

Rotate your Password Please

Because real agents live inside real software stacks, they inherit all the messy problems of distributed systems:

- Authentication

- Authorization

- Secret rotation

- Least privilege

- Governance

- Auditability

But they add new problems:

- Agents can replicate themselves

- Agents chain into other agents

- Agents can inherit or escalate privileges through these chains

- Agents may take security-relevant actions based on probabilistic decisions

- Agents run in distributed, sometimes disparate environments and boundaries

Identities are required not just for the agent, but also for the actions the agent performs, across tools, clouds, and other agents. When an LLM decides to call a tool or an MCP server executes an operation, someone must own that decision. Is it the human author, the agent, the workflow, or the runtime? Today, that answer is often “whatever API key was in the environment variable at the time.” This is precisely the moment microservices were in before workload identity.

Where We Are Going

As the technology cycle accelerates, early adopters are deploying agents into production, and major vendors are starting to define formal identity concepts and protocols for agents.

- Microsoft Entra now includes agent identities as first-class objects

- CyberArk is defining lifecycle and revocation models for agent identities

- Okta and the Cloud Security Alliance are publishing IAM frameworks for agents

- The OpenID Foundation is outlining governance and identity standards for agentic systems

In parallel, several trends are becoming clear:

- MCP servers are beginning to rely on OAuth and OIDC for authorization

- Static API keys are being replaced by runtime asserted identities

- Broad permissions are being split into fine-grained, short-lived scopes

- Governance is asking for auditable chains of authority

We are rebuilding the identity and security foundations that microservices required, but now for AI systems rather than RPC-based ones.

Failure Modes

As soon as AI agents begin to act on real systems, data, and cloud resources, familiar security problems crop up. Because agents combine deterministic code with probabilistic decision making, these problems occur in more subtle and harder-to-diagnose ways. Almost every failure mode shares a common root cause: the absence of reliable agent identity and clear delegation boundaries.

Privilege Escalation

Agents call other agents or invoke sub-agents created dynamically at runtime. Without a verifiable identity for each agent instance, it becomes impossible to determine which privileges were inherited or delegated. This creates a situation where sub-agents accidentally or intentionally operate with more privilege than the original delegator intended. The lack of scoped identity and structured delegation is the fundamental cause.
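One way to picture "scoped identity and structured delegation" is a rule that a sub-agent can only receive a subset of its parent's scopes, with the parent recorded on the child's identity. The sketch below is a minimal in-memory model of that rule. The `AgentIdentity` class, the scope strings, and the agent names are all hypothetical, and a real system would back this with signed credentials rather than Python objects.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str
    scopes: FrozenSet[str]
    parent: Optional["AgentIdentity"] = None

    def delegate(self, child_id, requested_scopes):
        """Create a sub-agent identity; it can never hold more than its parent."""
        requested = frozenset(requested_scopes)
        extra = requested - self.scopes
        if extra:
            raise PermissionError(
                f"{self.agent_id} cannot delegate {sorted(extra)}"
            )
        return AgentIdentity(child_id, requested, parent=self)

    def chain(self):
        """Return the delegation chain from the original delegator down to this agent."""
        node, ids = self, []
        while node is not None:
            ids.append(node.agent_id)
            node = node.parent
        return list(reversed(ids))


planner = AgentIdentity("planner", frozenset({"db:read", "tickets:write"}))
sql_helper = planner.delegate("sql-helper", {"db:read"})
print(sql_helper.chain())  # ['planner', 'sql-helper']
```

With this rule in place, `sql_helper.delegate("rogue", {"tickets:write"})` raises `PermissionError`, because `sql-helper` never held that scope itself.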

Misuse of Tools

Tool operations can carry dollar, security, or availability risks. For example, the ability to create cloud resources, modify config & code, or manipulate customer data. If an agent selects the wrong tool or supplies incorrect parameters, the blast radius can be severe. Without actionable identity tied to each tool call, there is no way to enforce the principle of least privilege or to know who initiated the action.
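A minimal sketch of identity tied to each tool call might look like the following: every invocation names the calling agent, is checked against a policy before the tool runs, and is logged whether or not it is allowed. The policy table, the `refund` tool, the agent names, and the `max_amount` limit are all hypothetical and exist only to show the shape of the check.

```python
# Hypothetical policy: which agent may call which tool, and within what limit.
POLICY = {
    ("billing-agent", "refund"): {"max_amount": 100},
}

AUDIT_LOG = []


def refund(amount):
    """Stand-in for a tool with real dollar cost."""
    return f"refunded {amount}"


TOOL_IMPLS = {"refund": refund}


def call_tool(agent_id, tool_name, **params):
    """Authorize, audit, then execute a tool call on behalf of agent_id."""
    rule = POLICY.get((agent_id, tool_name))
    allowed = rule is not None and params.get("amount", 0) <= rule["max_amount"]
    # Record the attempt either way, so denied calls are also traceable.
    AUDIT_LOG.append(
        {"agent": agent_id, "tool": tool_name, "params": params, "allowed": allowed}
    )
    if not allowed:
        raise PermissionError(f"{agent_id} may not call {tool_name} with {params}")
    return TOOL_IMPLS[tool_name](**params)
```

Under this sketch, `call_tool("billing-agent", "refund", amount=50)` succeeds. The same call with `amount=5000`, or any refund attempted by an agent with no policy entry, is refused and still leaves an audit entry. The policy check is ordinary deterministic code, so the model cannot talk its way past it.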

Jailbreaking

Agents use language models to decide what to do next. Notoriously, models hallucinate, infer incorrectly, or have their natural-language guardrails broken completely (often called jailbreaking). This means an agent might request an operation that no human anticipated or could even perceive. If the identity and authorization system cannot enforce strict boundaries, the LLM becomes a de facto policy engine. This creates the dangerous class of failure modes where intent is probabilistic, but the executed action is real.

Agent Who?

Agents act on behalf of a user, a workload, a workflow, or another agent. Without a clear delegation relationship, there is no accountability. This results in the problem where an action is taken under the wrong identity or against the wrong resource. Without traceable delegation, incident response and post-mortem become impossible.

Secret Sprawl

Because most agents today lack runtime asserted identity, developers fall back to environment variables and static credentials. These secrets leak through logs, prompts, model interactions, or multi-agent chains. When the credentials of one agent are copied into another, privileged access becomes untraceable and irreversible. This problem mirrors the early microservices era, and identity is the necessary correction.

Provenance

The growing focus on AI safety and governance comes from a simple question: who did what, when, and under what authority? With static API keys or agents without identity, that question has no answer. Without signed, structured provenance, it becomes impossible to audit, reason about, or validate AI decisions and actions. This is already a compliance risk for regulated industries.
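To illustrate what "signed, structured provenance" could mean, the sketch below records who did what, when, and under what authority, signs each record, and links it to the previous one so that later edits are detectable. This is an assumption-laden toy: the record fields, the shared `AUDIT_KEY`, and both function names are hypothetical, and a real system would likely use asymmetric signatures and tamper-resistant storage.

```python
import hashlib
import hmac
import json

# Hypothetical demo key; real systems would not share one symmetric key.
AUDIT_KEY = b"demo-only-audit-key"


def _sign(body):
    data = json.dumps(body, sort_keys=True).encode("utf-8")
    return hmac.new(AUDIT_KEY, data, hashlib.sha256).hexdigest()


def append_record(log, who, what, authority, when):
    """Append a signed record that is chained to the previous record's signature."""
    prev = log[-1]["sig"] if log else ""
    body = {"who": who, "what": what, "authority": authority,
            "when": when, "prev": prev}
    log.append({**body, "sig": _sign(body)})


def verify_log(log):
    """Return True only if every record is intact and the chain is unbroken."""
    prev = ""
    for record in log:
        body = {k: v for k, v in record.items() if k != "sig"}
        if body.get("prev") != prev:
            return False
        if not hmac.compare_digest(record["sig"], _sign(body)):
            return False
        prev = record["sig"]
    return True


log = []
append_record(log, "agent-1", "db.query", "user:alice", "2025-01-01T00:00:00Z")
append_record(log, "agent-2", "ticket.create", "agent:agent-1", "2025-01-01T00:00:05Z")
print(verify_log(log))  # True
```

If any field of an earlier record is changed after the fact, `verify_log` returns `False`. That property is what makes the log usable as evidence in an audit.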

In every case, the root problem is the absence of a strong and verifiable identity for agents, and a lack of explicit delegation and scoped authorization for every tool invocation.

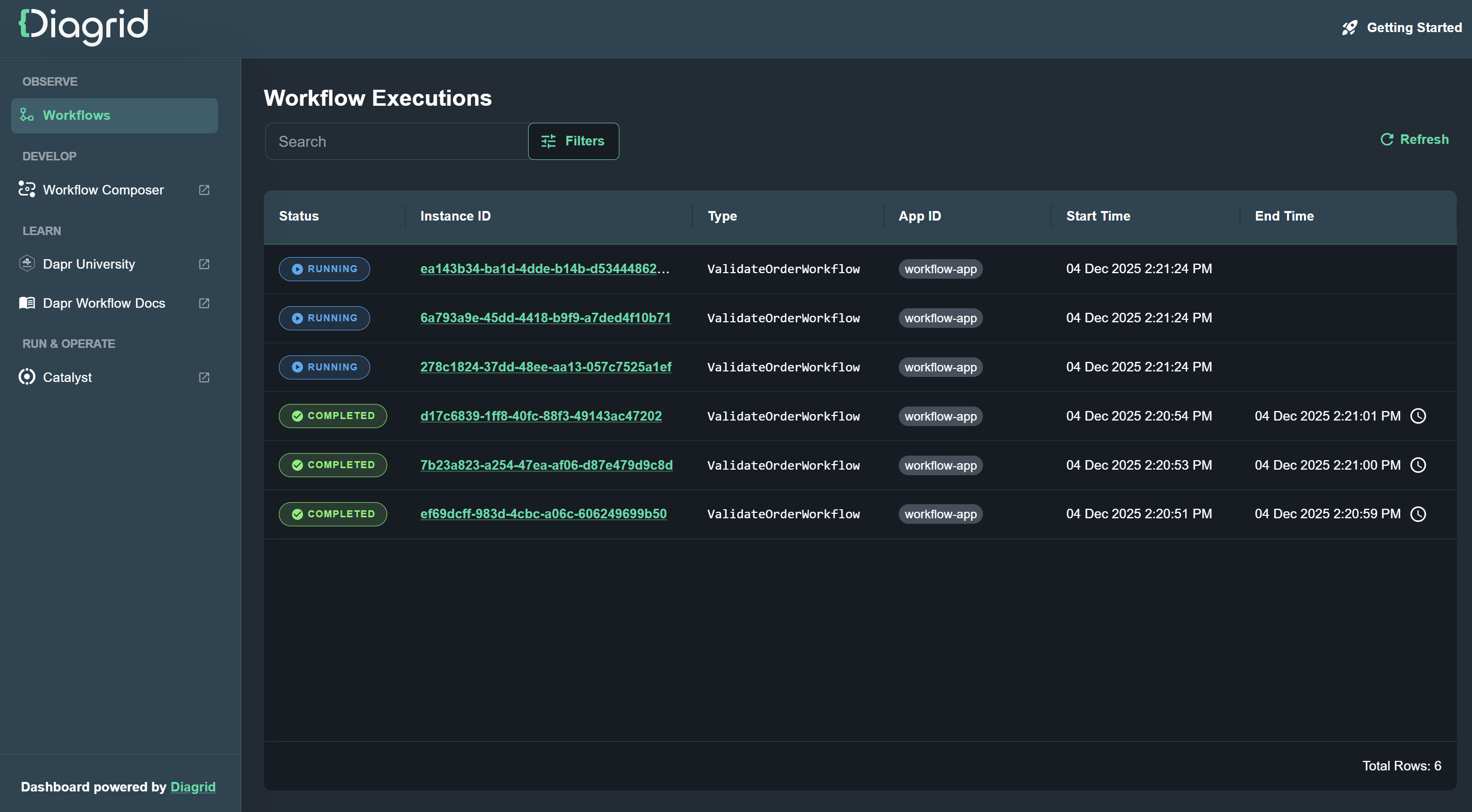

Bringing Identity to Agents with Diagrid Catalyst

The challenges outlined above are not theoretical. They are the direct result of running probabilistic decision-making inside real distributed systems without a first-class identity and policy layer. At Diagrid, we see AI agents not as a new category of magic software, but as a natural evolution of workflows, systems we already know how to secure, govern, and operate at scale. This is exactly where Catalyst comes in.

In part 2 of this blog post we will explore how Catalyst provides the foundational identity layer for AI agents, and how Workflows provide the execution context needed to make access decisions.

—

Summary

AI agents face the same identity crisis that microservices experienced two decades ago, relying on static API keys instead of proper workload identity. While agents are essentially deterministic workflows with LLM-driven decision points, their lack of runtime-asserted identities creates severe security risks including privilege escalation, credential sprawl, and accountability gaps. The industry is now developing identity standards similar to those that solved microservices' security challenges.

Interested in building production-grade agentic systems? Check out Dapr Agents, a Python framework for building durable and resilient AI agent systems built on top of Dapr. If you want to run your agents reliably in production, try Diagrid Catalyst, the enterprise platform for durable workflows and agentic AI.
